“Upgrading” the HBA on My Energy-Efficient NAS with an M.2 PCIe HBA from IOCREST

I bought an M.2 HBA. While it is awesome for me, it may not be for everyone. Read on to find out more about this miraculous device that turns an unused M.2 slot into an 8-port SATA HBA.

As you might have read, I retired my Dell R730xd not too long ago and replaced it with a DIY 12th Gen i3-based system for my NAS needs. I know the build isn’t perfect; in fact, I can think of many aspects I could improve. The most notable, I believe, is the aging SAS2008 HBA it uses.

Since this LSI HBA is a PCIe 2.0 x8 device, it has to occupy the only PCIe x16 slot on the motherboard. That slot is PCIe 4.0 x16, so filling it with a PCIe 2.0 HBA is just wasteful. Some of you may suggest plugging it into the PCIe 3.0 x4 slot instead, without sacrificing too much performance. You'd be right; however, the bottom PCIe 3.0 x4 slot on this motherboard leaves almost no clearance for a fan, and this HBA generates a lot of heat and needs a good amount of airflow for stable operation, so that option is out the window.
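To put rough numbers on that trade-off: a PCIe link trains to the lower generation and the narrower width of the card and the slot, so a PCIe 2.0 x8 card in a PCIe 3.0 x4 slot would run at PCIe 2.0 x4. The sketch below uses textbook line-code figures (8b/10b for PCIe 2.0, 128b/130b for PCIe 3.0 and 4.0) and ignores protocol overhead; it is an illustration, not a measurement.

```python
# Theoretical one-direction PCIe bandwidth, ignoring packet/protocol overhead.
# Per-lane figure = transfer rate x line-code efficiency, in MB/s (10**6 bytes).
PER_LANE_MB_S = {
    2: 5e9 * 8 / 10 / 8 / 1e6,      # PCIe 2.0: 5 GT/s, 8b/10b     -> 500 MB/s
    3: 8e9 * 128 / 130 / 8 / 1e6,   # PCIe 3.0: 8 GT/s, 128b/130b  -> ~985 MB/s
    4: 16e9 * 128 / 130 / 8 / 1e6,  # PCIe 4.0: 16 GT/s, 128b/130b -> ~1969 MB/s
}


def negotiated_link(card_gen: int, card_lanes: int, slot_gen: int, slot_lanes: int):
    """The link trains to the lower generation and narrower width of card and slot."""
    gen = min(card_gen, slot_gen)
    lanes = min(card_lanes, slot_lanes)
    return gen, lanes, PER_LANE_MB_S[gen] * lanes


if __name__ == "__main__":
    # SAS2008 (PCIe 2.0 x8) in the PCIe 4.0 x16 slot vs. the PCIe 3.0 x4 slot.
    for label, slot in (("x16 slot", (4, 16)), ("x4 slot", (3, 4))):
        gen, lanes, mb_s = negotiated_link(2, 8, *slot)
        print(f"{label}: PCIe {gen}.0 x{lanes} = {mb_s:.0f} MB/s")
```

In this model the card drops from about 4 GB/s to about 2 GB/s of theoretical uplink when moved to the x4 slot.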

So I started looking for a solution. After searching for quite a while, I found this little guy on AliExpress:

This is the “IOCREST M.2 PCIe3.0 to 8 Ports SATA 6G Multiplier Controller Card”. It plugs into an M.2 PCIe x4 slot on the motherboard, uses two of its PCIe 3.0 lanes, and fans out to eight SATA ports through two SFF-8086 connectors, four ports each. Needless to say, this is the dream form factor for my application: I don’t need two M.2 PCIe SSDs, but I do have eight HDDs to drive.

I ordered it immediately, alongside a PCIe 3.0 x1 dual-port 2.5GbE LAN card (review coming soon). Two weeks later, the parts arrived at my doorstep in a well-packaged box, and IOCREST had also included two SFF-8086 to four-SATA breakout cables.

Introduction

The design is very simple. The board carries a JMB585 PCIe-to-SATA chip, a JMB575 multiplier/selector chip, eight blue status LEDs, and two SFF-8086 connectors.

After some research, I learned that the JMB585 is the HBA, while the JMB575 is a port multiplier. Pairing a controller with a component that fans its ports out to more drives is a very common setup in server storage: my old R730xd has a PERC card connected to a backplane, and the backplane plays the part of the port multiplier. I am intrigued to see this kind of configuration achieved in such a small form factor.

Nonetheless, nothing is perfect. While the card provides 8 SATA 6Gb/s ports, it does come with compromises. According to JMicron's technical documentation (JMicron makes both chips), the JMB585 is a PCIe 3.0 x2 to 5-port SATA 6Gb/s controller. If it has only five ports, where do the other three come from? The answer lies with the JMB575, which takes one of the JMB585's SATA ports and multiplies it into four more SATA ports.

So, all in all, the JMB585 provides 5 ports, 4 of which are exposed directly to the user; the remaining port feeds the JMB575, which provides four more, for a total of eight SATA 6Gb/s ports.
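The port count can be written out as a small model. This is only a sketch: which JMB585 port actually feeds the JMB575 on the IOCREST board is an assumption here (the last one), and the labels are hypothetical, not names the card or the OS uses.

```python
from typing import List


def sata_port_map(hba_ports: int = 5, multiplier_fanout: int = 4) -> List[str]:
    """Label every user-facing SATA port on a JMB585 + JMB575 card.

    Assumption (not from a datasheet): the JMB585's last port is the one
    wired to the JMB575 port multiplier.
    """
    uplink = hba_ports - 1  # index of the JMB585 port feeding the multiplier
    ports = [f"JMB585 port {i} (direct)" for i in range(uplink)]
    ports += [
        f"JMB575 port {j} (shares JMB585 port {uplink})"
        for j in range(multiplier_fanout)
    ]
    return ports


if __name__ == "__main__":
    for label in sata_port_map():
        print(label)
    # (5 - 1) direct ports + 1 x 4 multiplied ports = 8 usable ports
    print(len(sata_port_map()), "ports total")
```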

Since this HBA uses only PCIe 3.0 x2, its theoretical maximum bandwidth is about 2 GB/s. Divided across the JMB585's five ports, each port gets a bit less than 400 MB/s when all of them are busy, and the four ports behind the JMB575 have to share one of those slices. So for all-flash storage this little guy isn't viable, since it would bottleneck the heck out of the four multiplied ports. I am using spinning disks, though, so this is much less of a concern.
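The same arithmetic as a short Python sketch. It assumes textbook PCIe 3.0 numbers (8 GT/s per lane, 128b/130b encoding), ignores protocol and AHCI overhead, and, like the divide-by-five above, assumes every JMB585 port is busy at the same time; an idle port leaves its share to the others, so real per-port figures under lighter load would be higher.

```python
# Rough bandwidth budget for a JMB585 + JMB575 card.
# Assumptions: PCIe 3.0 at 8 GT/s per lane, 128b/130b encoding, protocol
# overhead ignored, and every port busy at once (an even split).

PCIE3_GT_PER_LANE = 8.0      # giga-transfers per second per lane
PCIE3_ENCODING = 128 / 130   # 128b/130b line-code efficiency


def pcie3_bandwidth_mb_s(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in MB/s (1 MB = 10**6 bytes)."""
    bits_per_s = PCIE3_GT_PER_LANE * 1e9 * PCIE3_ENCODING * lanes
    return bits_per_s / 8 / 1e6


def port_shares(lanes: int = 2, jmb585_ports: int = 5, multiplied_ports: int = 4):
    """Split the uplink evenly across JMB585 ports, then split one port's
    share across the drives behind the JMB575 port multiplier."""
    uplink = pcie3_bandwidth_mb_s(lanes)
    per_jmb585_port = uplink / jmb585_ports
    per_multiplied_port = per_jmb585_port / multiplied_ports
    return uplink, per_jmb585_port, per_multiplied_port


if __name__ == "__main__":
    uplink, direct, shared = port_shares()
    print(f"PCIe 3.0 x2 uplink:      {uplink:7.1f} MB/s")
    print(f"per JMB585 port:         {direct:7.1f} MB/s")
    print(f"per port behind JMB575:  {shared:7.1f} MB/s")
```

With the defaults this works out to roughly 1969 MB/s for the uplink, about 394 MB/s per JMB585 port, and about 98 MB/s for each of the four drives behind the JMB575 in this idealized all-ports-busy case.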

Installation

So I started the swap. First, I shut down the TrueNAS Scale VM on my Proxmox host, removed the old HBA from the VM's hardware, and shut down the whole system. After pulling out the old LSI HBA, I removed the 256GB Samsung PM981 from the M.2 slot and installed the IOCREST M.2 HBA in its place.

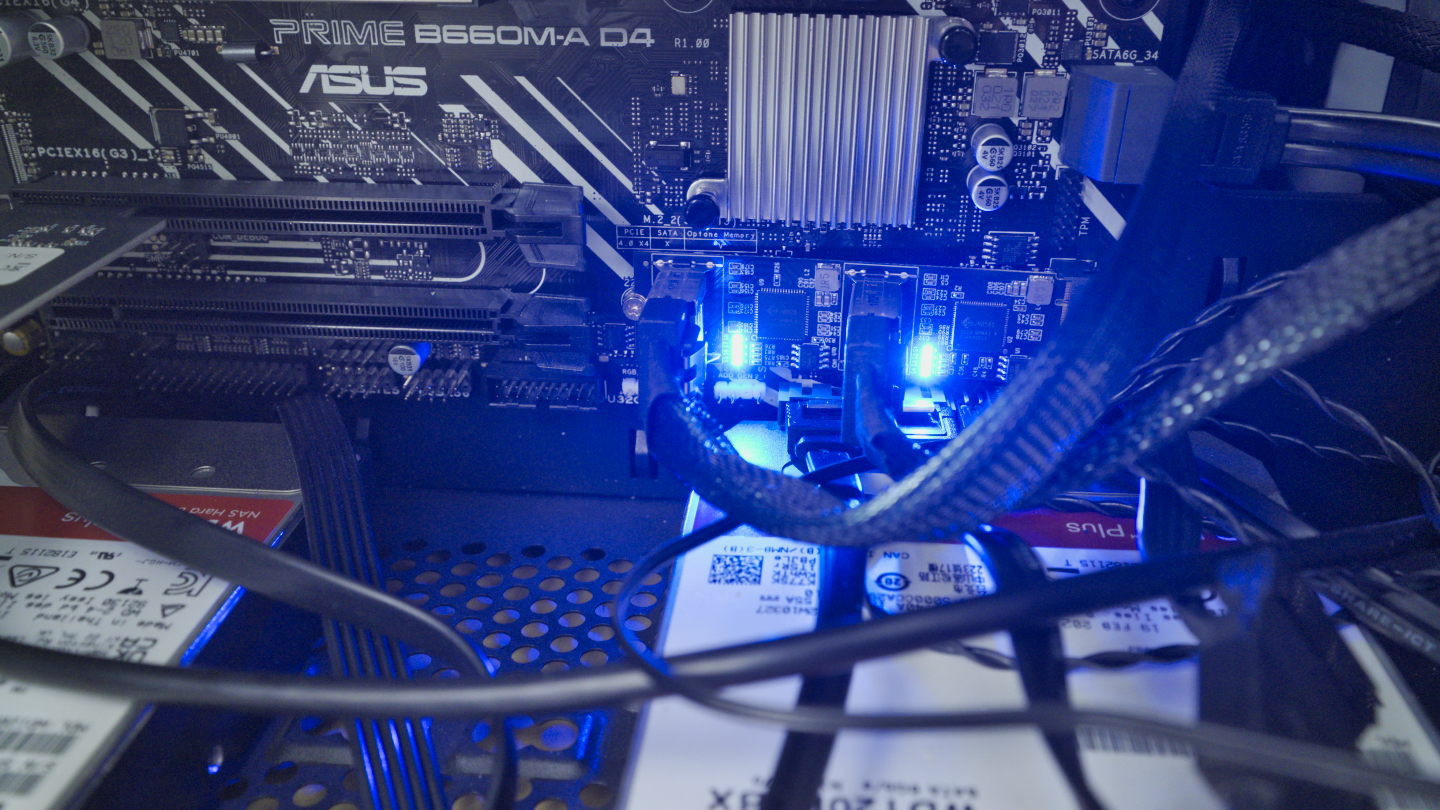

Then I plugged in the SFF-8086 cables, hoping that TrueNAS would recognize the disks automatically. Five minutes later the job was done, and the machine was back up and running. I also took this opportunity to install the PCIe 3.0 x1 dual-port 2.5GbE NIC from IOCREST. Out with the old, in with the new. As you can see, these status LEDs are extremely bright.

Once Proxmox had booted, I passed the JMB585 controller through to the TrueNAS VM. Then I anxiously fired up TrueNAS Scale and crossed my fingers. It did give me some chills when the VM took more than three minutes to boot, but once it did, all of my vdevs and zpools were recognized correctly.
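Before passing a controller through to a VM, it is worth checking which other devices share its IOMMU group, since VFIO generally needs the host to give up every device in a group together. A minimal sketch that reads the standard Linux sysfs layout; the function name is hypothetical, and you would look up the controller's real PCI address with lspci on the host.

```python
from pathlib import Path
from typing import List


def iommu_group_members(pci_addr: str, sysfs: str = "/sys") -> List[str]:
    """Return the PCI addresses that share an IOMMU group with pci_addr.

    Relies on the standard Linux sysfs layout:
      /sys/bus/pci/devices/<addr>/iommu_group -> .../kernel/iommu_groups/<N>
      /sys/kernel/iommu_groups/<N>/devices/<addr>  (one entry per member)
    """
    group_link = Path(sysfs) / "bus" / "pci" / "devices" / pci_addr / "iommu_group"
    if not group_link.exists():
        raise FileNotFoundError(f"{group_link} not found; is the IOMMU enabled?")
    members_dir = group_link.resolve() / "devices"
    return sorted(entry.name for entry in members_dir.iterdir())
```

For example, `iommu_group_members("0000:01:00.0")` (a hypothetical address) returning only that address means the controller sits alone in its group.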

A quick lspci in the TrueNAS Scale shell shows that the HBA is correctly recognized by the system. Since the JMB575 multiplier chip isn’t a PCI device, it doesn't show up there.
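lspci reads PCI configuration data that Linux also exposes under sysfs, so the same check can be sketched in Python by filtering on JMicron's PCI vendor ID, 0x197b. The helper name is hypothetical; for an AHCI SATA controller such as the JMB585, the class file should read 0x010601. As noted above, the JMB575 will not appear.

```python
from pathlib import Path
from typing import List, Tuple

JMICRON_VENDOR_ID = "0x197b"  # PCI vendor ID assigned to JMicron Technology Corp.


def find_pci_devices(vendor_id: str = JMICRON_VENDOR_ID,
                     sysfs: str = "/sys") -> List[Tuple[str, str, str]]:
    """Return (address, device ID, class code) for each PCI function from vendor_id.

    Reads /sys/bus/pci/devices/<addr>/{vendor,device,class}, the same
    identifiers lspci -nn prints.
    """
    found = []
    for dev in sorted((Path(sysfs) / "bus" / "pci" / "devices").iterdir()):
        if (dev / "vendor").read_text().strip().lower() == vendor_id.lower():
            found.append((
                dev.name,
                (dev / "device").read_text().strip(),
                (dev / "class").read_text().strip(),  # 0x010601 = SATA, AHCI mode
            ))
    return found
```

Calling `find_pci_devices()` inside the VM should list the passed-through controller and nothing for the multiplier.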

I did not benchmark this HBA since I didn't feel the need to. All I was trying to do was switch to an HBA with a more reasonable form factor to free up that PCIe x16 slot, and I think this little M.2 HBA did an awesome job. If anyone is curious how it actually performs, leave a comment below, and I will try to request a review sample from IOCREST, since I don’t want to pull this one out of my system.

More Thoughts on This HBA

For me, a good NAS system meets the following requirements:

- Energy efficient

- Good networking

- 8 or more drives

- Balanced system configuration

I retired the R730xd because of the first and last points. The Dell has dual-socket E5 Xeons, and the whole system idles at 140W+. Even with two CPUs, it is easily outperformed by a modern CPU like the AMD Ryzen 9 3900X, a 12-core, 24-thread part. The only thing the R730xd really has going for it is expansion: E5 CPUs have lots and lots of PCIe lanes, and the server motherboard provides up to 8 different slots.

Before jumping on the i3 NAS build, I was actually thinking about repurposing the Ryzen 9 3900X that I had replaced with a Ryzen 9 5950X. However, since it doesn't have the integrated GPU that its Intel counterparts have, I realized I wouldn't have enough PCIe slots for my usage. First, we need an HBA to drive the HDDs; there goes an x8 or x16 slot. Then we need networking, preferably 10G, so there goes an x1 or x4 slot. Finally, a Ryzen build needs a GPU, which also requires an x8 or x16 slot, and it has to be on the CPU's PCIe lanes or the system won't boot.

I could not find an mATX motherboard with at least two x8 slots, nor could I find one with even an x16 and two x4 slots. So I gave up on the idea and went with a 12th Gen Intel i3 just because it has integrated graphics.

However, this M.2 HBA from IOCREST completely changes the game. For a NAS, I don't need a second M.2 PCIe SSD to begin with, and many AMD B550 motherboards provide two M.2 slots. So instead of spending the x16 slot on an HBA, I can use one of the M.2 PCIe slots for it, which frees up the x16 slot for a GPU.
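The slot juggling described here is essentially a small assignment problem. The sketch below encodes the constraints from the text (the HBA wants an x8-class slot, the GPU must sit on CPU lanes) and greedily places the widest device first. The slot list loosely mirrors a typical mATX B550 layout and is an assumption, not the spec of any particular board; greedy placement is fine for a handful of slots but is not guaranteed optimal in general.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Slot:
    name: str
    kind: str   # "pcie" (card slot) or "m2"
    width: int  # electrical lanes
    cpu: bool   # True if wired to CPU lanes, False if to the chipset


@dataclass(frozen=True)
class Device:
    name: str
    kind: str
    width: int               # lanes the device wants
    needs_cpu: bool = False  # e.g. a GPU that must sit on CPU lanes to boot


def assign_slots(devices: List[Device], slots: List[Slot]) -> Optional[Dict[str, str]]:
    """Greedy placement: widest device first, into the narrowest free slot that fits.

    Returns {device name: slot name}, or None if some device has nowhere to go.
    """
    free = sorted(slots, key=lambda s: s.width)
    plan: Dict[str, str] = {}
    for dev in sorted(devices, key=lambda d: d.width, reverse=True):
        for slot in free:
            if (slot.kind == dev.kind and slot.width >= dev.width
                    and (slot.cpu or not dev.needs_cpu)):
                plan[dev.name] = slot.name
                free.remove(slot)  # safe: we break out of the loop right away
                break
        else:
            return None
    return plan


# Hypothetical mATX B550-style layout: one CPU x16, chipset x4 and x1, two M.2.
SLOTS = [
    Slot("PCIe x16 (CPU)", "pcie", 16, True),
    Slot("PCIe x4 (chipset)", "pcie", 4, False),
    Slot("PCIe x1 (chipset)", "pcie", 1, False),
    Slot("M.2 (CPU)", "m2", 4, True),
    Slot("M.2 (chipset)", "m2", 4, False),
]
COMMON = [
    Device("GPU", "pcie", 16, needs_cpu=True),
    Device("10G NIC", "pcie", 4),
    Device("Boot SSD", "m2", 4),
]

if __name__ == "__main__":
    print(assign_slots(COMMON + [Device("HBA (PCIe card)", "pcie", 8)], SLOTS))  # None
    print(assign_slots(COMMON + [Device("HBA (M.2)", "m2", 2)], SLOTS))
```

With a PCIe-card HBA there is no second wide slot left after the GPU, so the plan fails; moving the HBA to an M.2 slot lets every device find a home.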

For example, take a look at this mATX B550 offering from ASRock. It has dual M.2 PCIe slots, one x16 slot, one x1 slot, and one x4 slot provided by the chipset, and it even has an onboard 2.5GbE NIC. With this IOCREST M.2 HBA, I can do this build:

- CPU: Ryzen 9 3900X

- RAM: 32GB x2 3200 G.SKILL

- Mobo: ASRock B550M PG RIPTIDE

- SSD: Samsung 980 Pro 2TB (CPU M.2)

- GPU: EVGA 1080 Ti SC (PCIe x16)

- HBA: IOCREST M.2 PCIe to 8 SATA (Chipset M.2)

- NIC: Mellanox ConnectX-3 EN 10GbE (PCIe x4)

- NIC2: IOCREST Dual Port 2.5GbE (PCIe x1)

Given its CPU and GPU power, the whole system would be gold for a NAS with integrated cloud gaming. In my other testing, I found that a system with the 3900X and 1080 Ti idles at about 50-60W, which is good enough for me. Adding eight HDDs that idle at about 5W each brings the whole system to roughly 90-100W at idle. Sure, that is not a huge improvement over the R730xd's 140W+; however, the R730xd also can't compare to the 3900X's single-core performance, which both a NAS and a cloud gaming server need, or to its faster memory (DDR4-3200 versus DDR4-2400).
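The idle estimate is simple addition; the sketch below makes it explicit and, as an extra illustration, converts the gap to the R730xd into kWh per year. It treats the R730xd as a flat 140W (the text says 140W+, so the real gap is at least this large) and leaves electricity prices out.

```python
HOURS_PER_YEAR = 24 * 365


def idle_watts(platform_w: float, drives: int, watts_per_drive: float) -> float:
    """Idle draw = CPU/GPU/board baseline + per-drive idle draw."""
    return platform_w + drives * watts_per_drive


def kwh_per_year(watts: float) -> float:
    """Energy used by a constant load running all year."""
    return watts * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    low = idle_watts(50, 8, 5)   # 3900X + 1080 Ti at the low end of 50-60 W
    high = idle_watts(60, 8, 5)  # ... and at the high end
    print(f"estimated idle: {low:.0f}-{high:.0f} W")
    saved = kwh_per_year(140) - kwh_per_year(high)  # R730xd taken as a flat 140 W
    print(f"at least ~{saved:.0f} kWh/year less than the R730xd")
```

With the figures from the text this gives 90-100W at idle and roughly 350 kWh per year saved even in the worst case.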